In natural language processing, Word2Vec is one of the most widely used vectorization techniques used for transferring words or textual data into vector or numerical form to feed it to the machine learning model and train the model. It has certain advantages over the BagofWords and TfIdf vectorizers which make more advanced and accurate vectorization techniques.

In this article, we will discuss the Word2Vec vectorization techniques, what is the core intuition behind it, how it works, the code example of the same, and the limitations of the technique. This article will help one to understand the Word2Vec vectorizers from scratch and will be able to use them wherever necessary.

Table of Content

- What is Word2Vec?

- How Does Word2Vec Work?

- Types of Word2Vec

- Advantages of Word2Vec

- Key Takeaways

- Conclusion

What is Word2Vec?

As we know that machine learning algorithms require good quality and quantity of data in order to train and build the most accurate and reliable model. And also, we can not directly feed the textual type of data to the machine learning models, as it is not the acceptable format for the statistical models. Hence we are required to transform the textual type of data into a numerical form or vector form to feed the data to the model and get the model trained on the same.

For such cases, vectorization techniques are used, which transform these textual data into vector form by applying their own logic. There are many vectorization techniques available, like a bag of words, TfIdf, and Word2Vec, but among all of them, the Word2Vec is one of the most accurate and widely used approaches for vectorization due to its working mechanism and the advantages it offers over other vectorization methods.

How Does Word2Vec Work?

Unlike other vectorization techniques, Word2Vec vectorization works with the help of neural networks, which makes it more accurate and precise. Here our main aim is not to transform the word or text into a vector but to predict the next word to the middle word.

Here we take a dummy problem statement in a neural network, where we apply the neural networks to solve the problems, and while solving the problem, we get the vector form of the words as a by-product.

There are different types of Word2Vec vectorizers where different neural network architectures are used, and different problem states are taken to get the vector representation of the textual data.

Types of Word2Vec

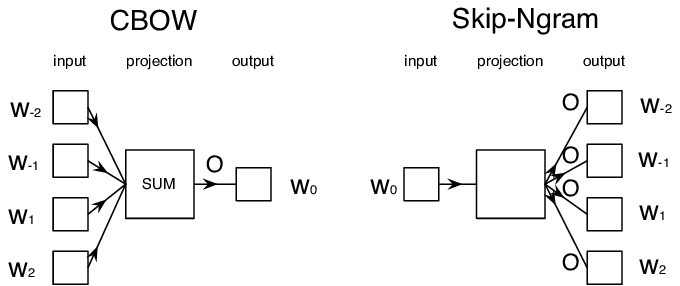

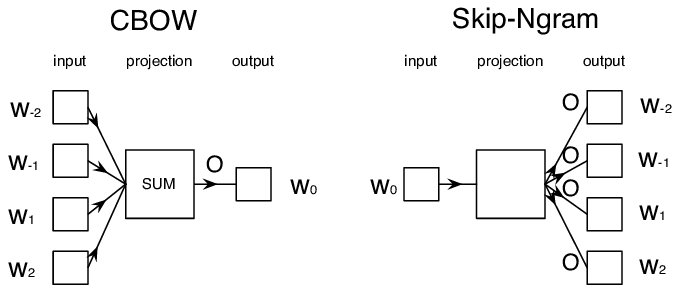

There are two main types of Word2Vec: 1. CBOW 2. Skipgram

In both of these Word2Vec vectorizers, different neural network architectures and different problem statements are taken.

CBOW

In the CBOW type of Word2Vec vectorizer, we pride t one word or the ideal word of the sentence with the help of other neighbors’ words.

Let us try to understand the same with an example. Let us say we have a sentence:

“PythonKitchen is Best”

Now here, we will not directly feed this sentence to the Word2Vec and get the output from the same; instead, we will create a problem statement here, that we will predict the middle word from the sentence, with the help of left and right words, and the middle word will be dropped by us.

Now we will apply neural networks in order to solve the same problem, and while predicting the middle word in the sentence, we will get the vector form of the word in the last layer of the neural network itself.

As we can see in the above image, the input words are fed to the neural networks model, and the model will predict the middle or the missing words. Now in the last layer of the network where we are getting the output, we will get the vector form of the word as well.

In general, the CBOW type of Word2Vec vectorizers works well with larger datasets.

Skipgram

In Skipgram, a type of vectorizer, we basically drop all the neighboring words and just keep the middle word which will be used to predict all the other words which are missing.

Let us take an example.

“PythonKitchen is Best”

In this sentence, we will just keep- the word “is” in the dataset, and all the other neighboring words will be dropped, and the same neural network will be used to predict these words. Now while predicting, we will get the vector forms of the words in the last layer of the neural network itself.

As we can see in the above image, one word is fed to the neural network model, and the model will predict all the other words where the vector form of the words will be obtained in the last layer.

In general, the skip-gram type of Word2Vec vectorizes works well with small datasets.

Advantages of Word2Vec

Semantic and Synthetic Meaning of Words:

The Word2Vec vectorizer can capture the patterns of different words and can, identify similar words and assigns them similar vector representations, which ultimately reduces the dimensionality of the dataset and make the model less complex.

Handle Small and Large Datasets:

The woprtd2vec can be used for both large and small datasets, where CBOW is mainly used in the case of the larger dataset, and Skipgram is used in the case of smaller datasets.

Handling Rare words:

Word2Vec can also handle the rare words which are not present in the training data and can generate the vector representations for the same. Here, the Word2Vec uses the subword information techniques technique, where it breaks the words into parts and generates the vector form of the same, with the help of other similar words in the training data.

Efficiency:

The Word2Vec vectorizer is comparatively efficient as it rains in less amount of time with large datasets, as the neural network used in the vectorizers contains less4er parameters.

Key Takeaways

- Word2Vec is a type of vectorization technique used to transform the textual type of data into vector form.

- CBOW and Skiopgram are the type of Word2Vec vectorizes where different neural network architectures and different problem statements are considered.

- CBOW is preferred for large datasets, and Skipgram is preferred for smaller datasets.

- Word2Vec can identify similar words and can represent them in vector form in a very efficient manner so that the dimensionality can be handled and the model does not become so complex.

- Word2Vec can also handle rare qwords where it uses the subword information in order to generate the vector representations of unseen words.

Conclusion

In this article, we discussed the word2vec vectorizes, what it is, how it works, the type of Word2Vec, and the advantages of the same. This article will help one to understand the vectorization technique more deeply and will be able to answer interview questions related to the same very easily and efficiently.